ETL using AWS Glue

Basic Idea

ETL is a core task for data engineers. It means extracting data from various sources, whether streaming or historical, transforming it into a form that meets business requirements, and finally loading it into suitable storage. In this blog we will walk through an ETL pipeline using AWS Glue.

AWS GLUE

AWS Glue is a fully managed, serverless ETL service. Serverless means that developers or users can build and run applications without having to manage servers. The infrastructure is managed entirely by AWS and billed on a pay-as-you-go basis. AWS Glue is used to prepare and transform data for analytics and other processing tasks. It simplifies data cleaning, conversion of data into the desired format, and so on.

Let's understand the architecture of AWS Glue.

AWS GLUE ARCHITECTURE

Data stores can be anything depending on the use case, e.g. S3, Redshift etc. In this walkthrough we load our data into S3 for further processing. The crawler, on the other hand, crawls through the folders of the data store, generates the schema, and stores the metadata in the Glue Data Catalog. The Data Catalog is not limited to data stores; it can also hold metadata from various other sources, including streaming data sources.

The data source uses the Data Catalog to locate and read the data held in the data store. The transformation step also relies on the metadata in the Glue Data Catalog, which is why maintaining that metadata matters. The data target, where the transformed data is stored, does not depend directly on the Data Catalog, although the catalog can still provide some value when working with targets.
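
To make the role of the catalog metadata concrete, here is a minimal Python sketch of reading a table's schema from the Glue Data Catalog. It assumes the boto3 library and its Glue client's `get_table` call; the database name `etl_db` and table name `source` are hypothetical placeholders, not values from this walkthrough.

```python
def table_columns(glue_client, database, table):
    """Return (column name, column type) pairs recorded in the Data Catalog."""
    response = glue_client.get_table(DatabaseName=database, Name=table)
    columns = response["Table"]["StorageDescriptor"]["Columns"]
    return [(column["Name"], column["Type"]) for column in columns]


# Possible usage (requires AWS credentials; names are hypothetical):
# import boto3
# print(table_columns(boto3.client("glue"), "etl_db", "source"))
```

The client is passed in as an argument so the function can be tried against a stub before pointing it at a real account.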

Some Common Terminologies in Glue

Data Store

A data store is the storage location that holds the source data for AWS Glue, e.g. S3 or Redshift.

Data Source

A data source is the dataset, or the data itself, that lives within the data store.

Data Target

Data target is where the final transformed data is stored.

Crawler

A crawler does what its name suggests. It is used to crawl the dataset in a data store, automatically generate the schema, identify the data format, relations between data etc., and store the metadata in the Data Catalog.

Simple ETL Pipelining Walkthrough in AWS Glue

Create source and destination folders in an S3 bucket.
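
The same step can also be scripted. The sketch below assumes boto3's S3 client and relies on the convention that a zero-byte object whose key ends in `/` shows up as a folder in the S3 console. The bucket name `my-etl-bucket` is a hypothetical placeholder.

```python
def folder_keys(*names):
    """Turn folder names into S3 keys that end with a single trailing slash."""
    return [name.strip("/") + "/" for name in names]


def create_folders(s3_client, bucket, names):
    """Create one empty placeholder object per folder and return the keys used."""
    keys = folder_keys(*names)
    for key in keys:
        s3_client.put_object(Bucket=bucket, Key=key)
    return keys


# Possible usage (requires AWS credentials; the bucket name is hypothetical):
# import boto3
# create_folders(boto3.client("s3"), "my-etl-bucket", ["source", "destination"])
```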

Navigate to AWS Glue and create a crawler whose source data location is the source folder we created above. A database should also be created while setting up the crawler, which is helpful for querying the dataset using SQL.
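
For readers who prefer scripting over the console, the crawler setup might look roughly like this with boto3's Glue client (`create_database`, `create_crawler`, `start_crawler`). This is a sketch under assumptions: the crawler name, IAM role ARN, database name, and S3 path are all hypothetical, and the role must already exist with access to the bucket.

```python
def crawler_request(name, role_arn, database, s3_path):
    """Build the keyword arguments for a crawler that scans one S3 path."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def create_and_start_crawler(glue_client, request):
    """Create the catalog database, register the crawler, then start it."""
    glue_client.create_database(DatabaseInput={"Name": request["DatabaseName"]})
    glue_client.create_crawler(**request)
    glue_client.start_crawler(Name=request["Name"])


# Possible usage (all values are hypothetical placeholders):
# import boto3
# request = crawler_request(
#     "source-crawler",
#     "arn:aws:iam::123456789012:role/GlueCrawlerRole",
#     "etl_db",
#     "s3://my-etl-bucket/source/",
# )
# create_and_start_crawler(boto3.client("glue"), request)
```

Note that `create_database` raises an error if the database already exists, so a real script would need to handle that case.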

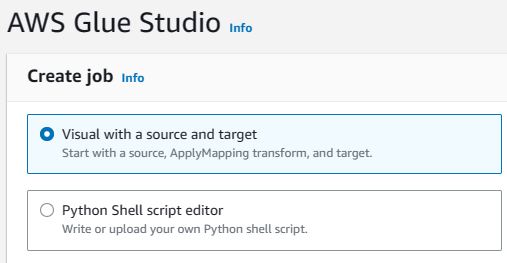

Next, navigate to Jobs under AWS Glue Studio. For simplicity, select "Visual with Source and Target" and click Create.

We will then see the following diagram.

Select the Data Catalog table option for the source block, then continue to the target block, where the location is the destination folder we created initially.

The transformation block starts with a default mapping only; we can adjust, add, or delete mappings and perform many other tasks.
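
To give a feel for what a mapping does, here is a plain-Python imitation of it. It is not Glue's implementation; it only borrows the tuple shape used by Glue's `ApplyMapping` transform, `(source column, source type, target column, target type)`. The column names and the small set of supported types are assumptions made for illustration.

```python
# Casts for the handful of type names this sketch supports.
CASTS = {"string": str, "int": int, "long": int, "double": float}


def apply_mapping(record, mappings):
    """Rename and cast the mapped fields of one record; unmapped fields are dropped."""
    result = {}
    for source, _source_type, target, target_type in mappings:
        if source in record:
            result[target] = CASTS[target_type](record[source])
    return result


mappings = [
    ("id", "string", "user_id", "int"),   # rename and cast
    ("name", "string", "name", "string"),  # keep as-is
]
print(apply_mapping({"id": "7", "name": "Ann", "extra": 1}, mappings))
# → {'user_id': 7, 'name': 'Ann'}
```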

Finally, click Run.
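
A job can also be started and monitored from code. The sketch below assumes boto3's Glue client (`start_job_run` and `get_job_run`); the job name `my-etl-job` is hypothetical, and the set of states treated as final is an assumption based on the job run states Glue reports.

```python
import time

# Job run states this sketch treats as final (an assumption, adjust as needed).
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def run_job_and_wait(glue_client, job_name, poll_seconds=30, sleep=time.sleep):
    """Start a Glue job run and poll until it reaches a final state."""
    run_id = glue_client.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)
        state = run["JobRun"]["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)


# Possible usage (requires AWS credentials; the job name is hypothetical):
# import boto3
# print(run_job_and_wait(boto3.client("glue"), "my-etl-job"))
```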

Conclusion

AWS Glue is thus a powerful tool for building ETL pipelines. It can also be combined with other services and tools, such as Apache Hudi and AWS Lambda, to make it even more powerful.
