Docker With PostgreSQL

Brief Introduction to Docker

Docker is a platform as a service (PaaS) for developing, shipping and running applications in containers.

By containers, i mean, a lightweight, portable and self-sufficient unit capable of running applications and their dependencies. It is a runnable instance of a Docker image. Docker container runs inside a host machine but completed isolated from host machine.

Importance of Docker

Some of the importance of docker are highlighted as:

Dependency Management

As data engineers we work on various pipelines and applications. So, to simplify the dependency and make sure we don't end up with conflict across different environment, it is necessary to encapsulate the dependencies of applications in a container and generate an image for it.

Portability

Docker containers are portable and can be easily moved between different environments, which makes it simpler to deploy and share pipelines and applications across teams or organizations easily.

Environment Consistency

While working with various tools, databases, and dependencies. Docker allows us to package their applications along with all the required dependencies into containers, ensuring consistent environments across development, testing, and production.

Time Saving:

It would be time consuming to set up all the necessary environment and dependency for running applications for testing, debugging etc. while docker container automates this process enabling developers to focus more on building and optimization.

Running containers in docker

In this blog post, we will explore some essential Docker commands to gain familiarity with how containers actually work. Before proceeding, ensure that Docker is installed on your device. To check if Docker is running correctly, execute the following command:

docker run hello-world

You should observe a message from Docker indicating successful execution. Additionally, you can run an Ubuntu image with an interactive terminal:

docker run -it ubuntu bash

The -it flag signifies an interactive terminal. Congratulations, you've successfully run your first container in Docker!

Now, let's drive into running a PostgreSQL container in this blog.

To run Postgres with Docker we shall run a container based on PostgreSQL 13 image with specific config and settings:

docker run -it \

-e POSTGRES_USER="admin" \

-e POSTGRES_PASSWORD="a" \

-e POSTGRES_DB="ny_taxi" \

-v d:/DockerBlog/ny_taxi_pg_data:/var/lib/postgresql/data \

-p 5432:5432 \

postgres:13

docker run: This command is used to run a Docker container.

-it: This flag ensures the container runs in interactive mode and connects the terminal to the container's standard input.

-e POSTGRES_USER="admin": Sets the PostgreSQL username to "root".

-e POSTGRES_PASSWORD="a": Sets the password for the PostgreSQL user to "root".

-e POSTGRES_DB="ny_taxi": Creates a PostgreSQL database named "ny_taxi".

-v d:/DockerBlog/ny_taxi_pg_data:/var/lib/postgresql/data: Mounts a volume from the host machine at d:/DockerBlog/ny_taxi_pg_data to the container's /var/lib/postgresql/data directory. This allows the PostgreSQL data to be persisted on the host machine.

-p 5432:5432: (port forwarding) Maps port 5432 on the host machine to port 5432 on the container, allowing external access to the PostgreSQL database.

postgres:13: Specifies the Docker image to use for the container, in this case, PostgreSQL version 13.

After this PostgreSQL container should run in your terminal. Now to get instance of PostgreSQL terminal , we need a package i.e. pgcli

pip install pgcli

After installation of pgcli we shall run following command in terminal:

pgcli -h localhost -p 5432 -u admin -d ny_taxi

now a popup should appear asking for password which in our case is "root" which we set while running PostgreSQL container above.

then we get:

Now let's ingest data in PostgreSQL. For this we shall use a python script (ipynb):

I have downloaded NY taxi data from website below:

FYI,: This is not an optimum code but will be enough to get our job done. Yop will get an error after while loops ends but its fine.

upload_data.ipynb

import pandas as pd

from sqlalchemy import create_engine

taxi_data = pd.read_csv('yellow_tripdata_2021-01.csv')

taxi_data.head()

engine = create_engine('postgresql://admin:a@localhost:5432/ny_taxi')

engine.connect()

# checking schema

print(pd.io.sql.get_schema(taxi_data,name="taxi_Data_table",con=engine))

# for obtaining column names

taxi_data.head(n=0).to_sql(name='yellow_taxi_data',con=engine,if_exists='replace')

# since data is of large size we break it ito chunks

df_iter =pd.read_csv('yellow_tripdata_2021-01.csv',iterator=True,chunksize=10000)

while True:

taxi_data=next(df_iter)

taxi_data['tpep_pickup_datetime']=pd.to_datetime(taxi_data['tpep_pickup_datetime'])

taxi_data['tpep_dropoff_datetime']=pd.to_datetime(taxi_data['tpep_dropoff_datetime'])

%time taxi_data.to_sql(name='yellow_taxi_data',con=engine,if_exists='append')

print('inseretd another chunk')

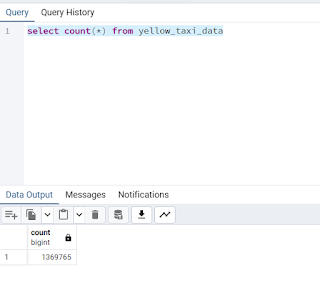

After the code is executed, go to the terminal where we used pgcli to connect to PostgreSQL and type

\dt

\dt

select count(*) from yellow_taxi_data;

Well, congrats, we successfully inserted data in our database. But working with cli is quiet hard, so lets shift to GUI for more interactive environment.

Now before diving into this, we have to know that GUI PostgreSQL and PostgreSQL will be running on two different containers, so we have to link the containers via pg-network so, let's stop all the containers and link the GUI.

# for postgres server

docker run -it \

-e POSTGRES_USER="admin" \

-e POSTGRES_PASSWORD="a" \

-e POSTGRES_DB="ny_taxi" \

-v d:/DockerBlog/ny_taxi_pg_data:/var/lib/postgresql/data \

-p 5432:5432 \

--network=pg-network \

--name pg-database \

postgres:13

# for GUI

docker run -it \

-e PGADMIN_DEFAULT_EMAIL="admin@admin.com" \

-e PGADMIN_DEFAULT_PASSWORD="root" \

-p 8080:80 \

--network=pg-network \

--name pgadmin \

dpage/pgadmin4

then in browser run and login to:

now let's set up the server, you can follow the steps below:

Step1:

Conclusion

In summary, we successfully set up Postgres using Docker containers, making use of both graphical and command-line interfaces for PostgreSQL. We uploaded data and ran SQL queries, highlighting the practical benefits of Docker. This small project demonstrated the importance of Docker, and there's even more it can do, like setting up Docker files and using Docker Compose. These additional features help us keep a consistent environment for testing and debugging. Overall, this project shows how Docker simplifies development processes.

We will see all of there in next blog, stay tuned!!!!!!!!😊😊

Comments

Post a Comment